There are many SEO best practices. Consider, for example, the fact that Google has at least 200 ranking factors influencing its search algorithm. A significant portion of these factors falls under the categories of “on-page” and “off-page” SEO. Think expert content, links from high-authority domains, and descriptive metadata. However, knowing exactly what Google wants in these realms is something of a black box, and that’s a problem for anyone who cares about aligning their websites with search engines.

There is one area where Google has actually been quite public and explicit about the direction it wants websites to follow: technical SEO. And it makes sense. If you think about it, Google’s business model for search is predicated on crawling and indexing the internet in a timely manner to make the world’s information universally accessible and useful. This mission is compromised when its crawlers encounter a critical mass of websites that are difficult to crawl and index. That’s why the company has been so public about certain technical SEO initiatives.

The two elements highlighted in this post are dynamic rendering and structured data markup. We will talk about what they accomplish, why Google endorses them, how they are beneficial to your organic channel, and the ways you can incorporate them into a future-proofed SEO framework I like to call “Google’s Perfect World”. Let’s get started.

Technical SEO Initiative #1: Dynamic Rendering

Context – an internet that is difficult to efficiently crawl and index

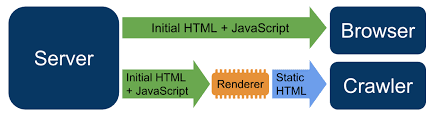

The internet is growing more complex every day, at the expense of Google’s aforementioned mission of organizing and serving useful information to people as quickly as possible. The growth of JavaScript-powered websites is one example of this increasing complexity. These websites demand significant resources from Google, which forces crawling and indexing to be performed in a two-wave process. First, the static HTML and CSS are processed. Then, these websites are put in a queue for the JavaScript-generated content to be rendered as resources become available, sometimes days or weeks later. As you can imagine, this is a resource-intensive process for Google, but it is also extremely problematic for websites (news organizations come to mind) that need their content marketing efforts indexed frequently.

Solution – serving a version of your website that Google can easily crawl and index

If you are an SEO nerd or a fan of the Google I/O conference, you may have noticed that Google’s developers dedicated an entire session at the 2018 event to a ground-breaking solution to this problem. It’s called dynamic rendering, and it could be one of the most important initiatives Google has endorsed in the last decade.

In the simplest terms, dynamic rendering is the process of serving separate versions of your website, depending on what requests it. You can have a version optimized for the human experience and you can have a version optimized for the robot experience. Currently, this is the antidote for all the JavaScript-powered websites out there that worry whether their content marketing efforts are being thoroughly indexed in a timely manner, if at all! In the robot-optimized version, for example, you can serve pre-rendered, static HTML in place of the dynamic content so there is no need for a second wave of crawling and indexing.
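To make the idea of "depending on what requests it" more concrete, here is a minimal sketch of the routing decision in Python. It assumes the server can read the request's User-Agent header. The pattern list and the names `is_crawler` and `choose_version` are hypothetical and for illustration only; a real setup would use a maintained crawler list and would likely also verify that a request really comes from the search engine it claims to be.

```python
import re

# Hypothetical, non-exhaustive list of crawler user-agent substrings (assumption).
BOT_PATTERN = re.compile(
    r"googlebot|bingbot|duckduckbot|baiduspider|yandex", re.IGNORECASE
)


def is_crawler(user_agent: str) -> bool:
    """Return True if the User-Agent string looks like a known search bot."""
    return bool(BOT_PATTERN.search(user_agent or ""))


def choose_version(user_agent: str) -> str:
    """Pick which version of the page to serve.

    "prerendered" stands for the static HTML snapshot meant for robots;
    "client" stands for the normal JavaScript-driven page meant for humans.
    """
    return "prerendered" if is_crawler(user_agent) else "client"


if __name__ == "__main__":
    bot = "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"
    human = "Mozilla/5.0 (Windows NT 10.0; Win64; x64) Chrome/120.0"
    print(choose_version(bot))
    print(choose_version(human))
```

The important design point is that both versions should carry the same content. Only the delivery differs, with the robot version arriving as finished HTML.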

Benefits – the perfect crawling experience for Google

There are numerous benefits of utilizing dynamic rendering.

- First, dynamic rendering nullifies the age-old SEO debate of whether to prioritize optimization for the human experience or the robot experience. Now, you do not have to choose – you can do both without compromise.

- Second, dynamic rendering offers the peace of mind that all of your content marketing efforts are seeing the light of day on relevant SERPs. Google is able to load pages faster, see more information, and ultimately, index more content in a much shorter timespan.

- Third, dynamic rendering sets the stage for a much larger technical SEO framework I like to call “Google’s Perfect World” that incorporates other best practices like structured data markup and edge delivery of content.

Technical SEO Initiative #2: Structured Data Markup

Context – Google needs help understanding content

Google’s Perfect World is an answer to the following question: what would a website look like if it were optimized for the perfect crawling and indexing experience? In this world, websites are built in flat HTML, contain world-class structured data markup, and have pages that load instantly. Dynamic rendering elegantly serves the purpose of this perfect world, but not all websites on the internet have a lot of dynamic content. Some only need to satisfy certain elements of this perfect world. One thing that applies to all websites, however, is the use of structured data markup.

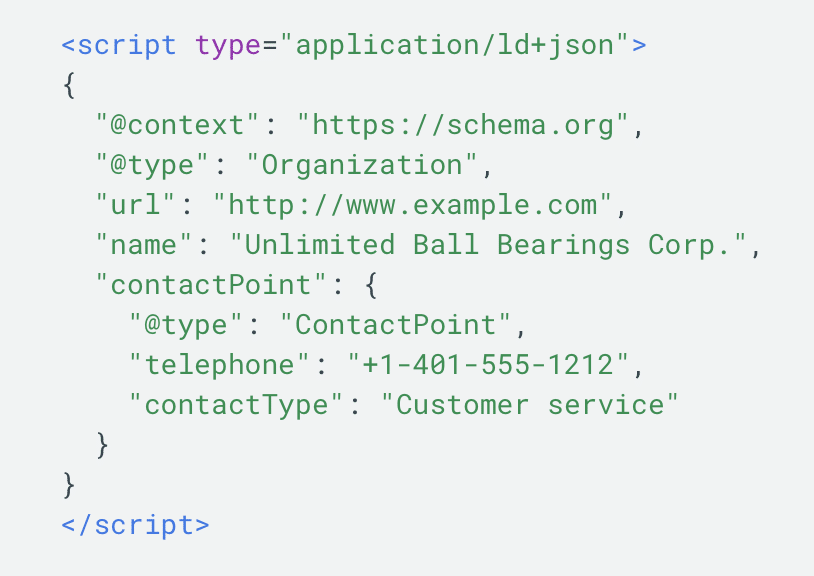

Solution – speak the language of Google

Structured data is the language of search engines. It helps them contextualize and understand content so they know what they are crawling and can more accurately match it to relevant search queries. Google’s search engine is a machine, which of course lacks the natural language processing capabilities humans take for granted. Structured data gives search engines explicit clues about the nature of the content that is being crawled. For example, markup can tell them that what they are crawling is a recipe, or a software product, or a frequently asked questions section about some business service. The list goes on. Google endorsed this initiative in 2014, as did all of the other major search engines, for the purpose of creating this clarity while crawling and indexing the internet.

Example of structured data in the Google-preferred JSON-LD format.
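As a rough sketch of what such markup could look like, the Python snippet below builds a schema.org `Recipe` object and wraps it in the `<script type="application/ld+json">` tag that JSON-LD uses inside a page. The helper name `recipe_jsonld` and the sample values are made up for illustration. A real recipe page would normally include more properties than the three shown here.

```python
import json


def recipe_jsonld(name, author, ingredients):
    """Build a JSON-LD <script> tag describing a recipe (illustrative helper)."""
    data = {
        "@context": "https://schema.org",
        "@type": "Recipe",
        "name": name,
        "author": {"@type": "Person", "name": author},
        "recipeIngredient": list(ingredients),
    }
    # Escape "</" so a value can never close the surrounding script tag early.
    # "\/" is a legal JSON escape, so the payload still parses to the same data.
    payload = json.dumps(data, indent=2).replace("</", "<\\/")
    return '<script type="application/ld+json">\n' + payload + "\n</script>"


if __name__ == "__main__":
    # Sample values are invented for the example.
    print(recipe_jsonld("Simple Pancakes", "Jane Doe", ["flour", "milk", "eggs"]))
```

The resulting tag can be placed in the page's HTML, where it tells a crawler explicitly that the page is a recipe and does not leave it to infer that from the visible text.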

Benefits – improved search appearance

There are numerous SEO benefits of utilizing structured data markup.

- The first benefit is a possible increase in the number of ranking keywords that matter most to your business. When search engines understand your content better, it follows that they will do a much better job of matching it with the queries of your target audience.

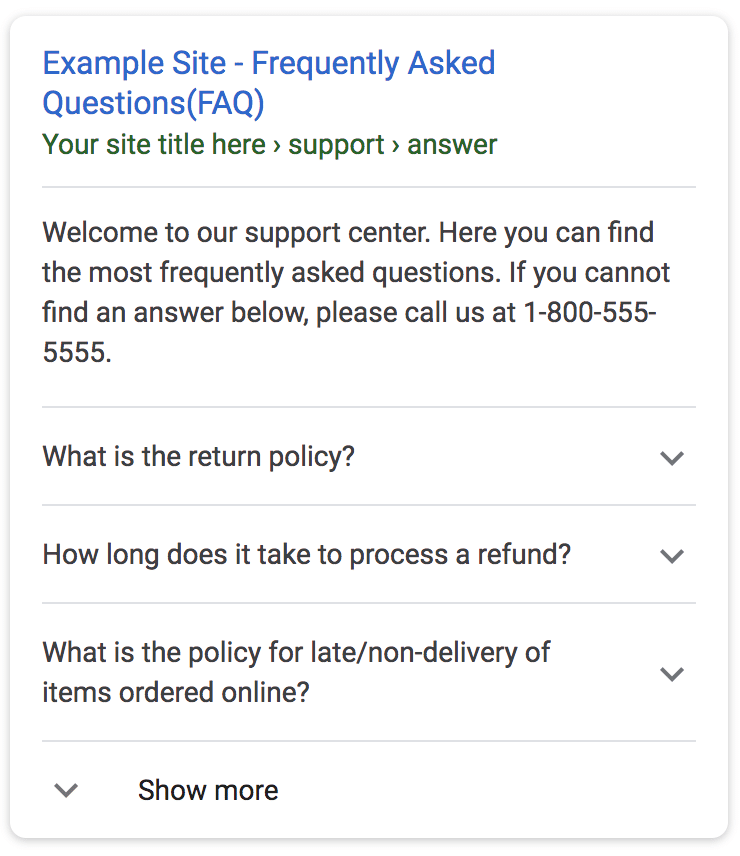

- Second, structured data qualifies your content for a new organic search experience called “rich results,” which enhances the appearance of your standard blue links with features like ratings, reviews, how-tos, FAQs, and videos. In total, there are over 30 features you can qualify for that improve your visibility, increase SERP real estate, and generally entice more searchers to click through to your website.

- Third, structured data future-proofs your content for the voice and audio revolution in search. Smart speakers, for example, are being utilized more and more, and the functionalities of these devices are powered by structured data. As people become more comfortable with doing voice searches, your content will be ahead of the curve and already optimized for their commands and associated actions.

Example of FAQ rich results powered by structured data.
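For FAQ content specifically, the markup behind such a result is typically a schema.org `FAQPage` whose `mainEntity` lists each question with its accepted answer. The sketch below assumes the questions and answers are available as simple pairs. The function name `faq_jsonld` and the sample text are hypothetical, and adding the markup makes a page eligible for the feature without guaranteeing that it appears.

```python
import json


def faq_jsonld(pairs):
    """Turn (question, answer) pairs into a schema.org FAQPage dictionary."""
    return {
        "@context": "https://schema.org",
        "@type": "FAQPage",
        "mainEntity": [
            {
                "@type": "Question",
                "name": question,
                "acceptedAnswer": {"@type": "Answer", "text": answer},
            }
            for question, answer in pairs
        ],
    }


if __name__ == "__main__":
    # Sample question and answer are invented for the example.
    faq = faq_jsonld([
        ("What is dynamic rendering?",
         "Serving a pre-rendered version of a page to search bots."),
    ])
    print(json.dumps(faq, indent=2))
```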

Conclusion – The Future of SEO is Technical SEO

We often turn to tactics like content creation and link building in order to move the needle in organic search. But as the internet has grown more complex and websites have had more opportunities to engage on a regular basis with a variety of robots, technical SEO has become a fundamental lever for driving performance too. As I have shown here, by creating the perfect crawling experience for search bots via dynamic rendering and structured data markup, you can improve the indexation of your content marketing and qualify for rich features that enhance your appearance in search results. The makeup of this perfect world and its associated use cases are growing as well. In addition to the initiatives I elaborated on in this post, pay close attention to other nascent subsets of the field, like edge SEO. If you can create a website that communicates quickly and with clarity to robots, you will be well ahead of most of the internet. Technical SEO will quickly become a competitive advantage. Thank you for reading!